Diffusion Model

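A generative model that gradually adds (normally distributed) noise to an input image over many steps and then learns to remove that noise step by step, so that the images it generates follow a probability distribution similar to that of the input images.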
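The noising half of this description can be sketched in a few lines. The snippet below is a minimal illustration, assuming a DDPM-style linear noise schedule and NumPy; the function names, the schedule values, and the 8x8 random array standing in for an image are all assumptions made for the example, not something taken from these notes. In a real model the noise estimate comes from a trained network and denoising runs iteratively over many steps; here the true noise is reused only to show that the algebra inverts.

```python
import numpy as np

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule (assumed values)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t from q(x_t | x_0) in closed form and return the noise that was used."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return x_t, noise

def recover_x0(x_t, t, alpha_bars, predicted_noise):
    """Invert the closed form given a noise estimate (normally produced by a trained network)."""
    return (x_t - np.sqrt(1.0 - alpha_bars[t]) * predicted_noise) / np.sqrt(alpha_bars[t])

rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))            # stand-in for a tiny image scaled to [-1, 1]
x_t, noise = add_noise(x0, t=500, alpha_bars=alpha_bars, rng=rng)
x0_hat = recover_x0(x_t, 500, alpha_bars, noise)    # true noise reused, so recovery is exact here
```

With this schedule the signal coefficient `alpha_bars[t]` shrinks toward zero as `t` grows, which is why the final step is close to pure Gaussian noise.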

SHAP (SHapley Additive exPlanations)

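The Shapley value is a method from game theory for computing how much each player contributed in a game.

Applied to a model, it expresses as a number how much each feature contributed to producing the overall outcome (the prediction).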

SHAP: Shapley values of a conditional expectation function of the model's output

A theoretical approach that uses game theory to explain the output of a machine learning model...
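To make the "contribution of each player" idea concrete, here is a minimal sketch that computes exact Shapley values for a toy three-feature model by enumerating every coalition. It uses only the Python standard library. The toy model, the all-zero baseline, and the choice to replace "absent" features with baseline values are assumptions for illustration; the SHAP library itself uses conditional expectations and faster approximations rather than this brute-force loop.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating all coalitions (only practical for few features)."""
    n = len(x)

    def value(subset):
        # Features in `subset` keep their real value; the rest fall back to the baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

def toy_model(features):
    # Hypothetical model: one additive term plus one interaction term.
    a, b, c = features
    return 2.0 * a + b * c

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

For this toy model the values come out to approximately `[2.0, 3.0, 3.0]`: feature `a` gets its additive term, the `b * c` interaction is split evenly, and the three values sum to `toy_model(x) - toy_model(baseline)`.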

LIME (Local Interpretable Model-agnostic Explanations)

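LIME is a model-agnostic XAI algorithm, so it can be applied regardless of the type of model.

It accepts not only tabular data but also other data types such as images and natural language text.

It fits a glass-box algorithm, such as a linear model, to the model's local decision boundary around a single prediction. This fitted model is called a surrogate model, and its coefficients are used to provide the explanation.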

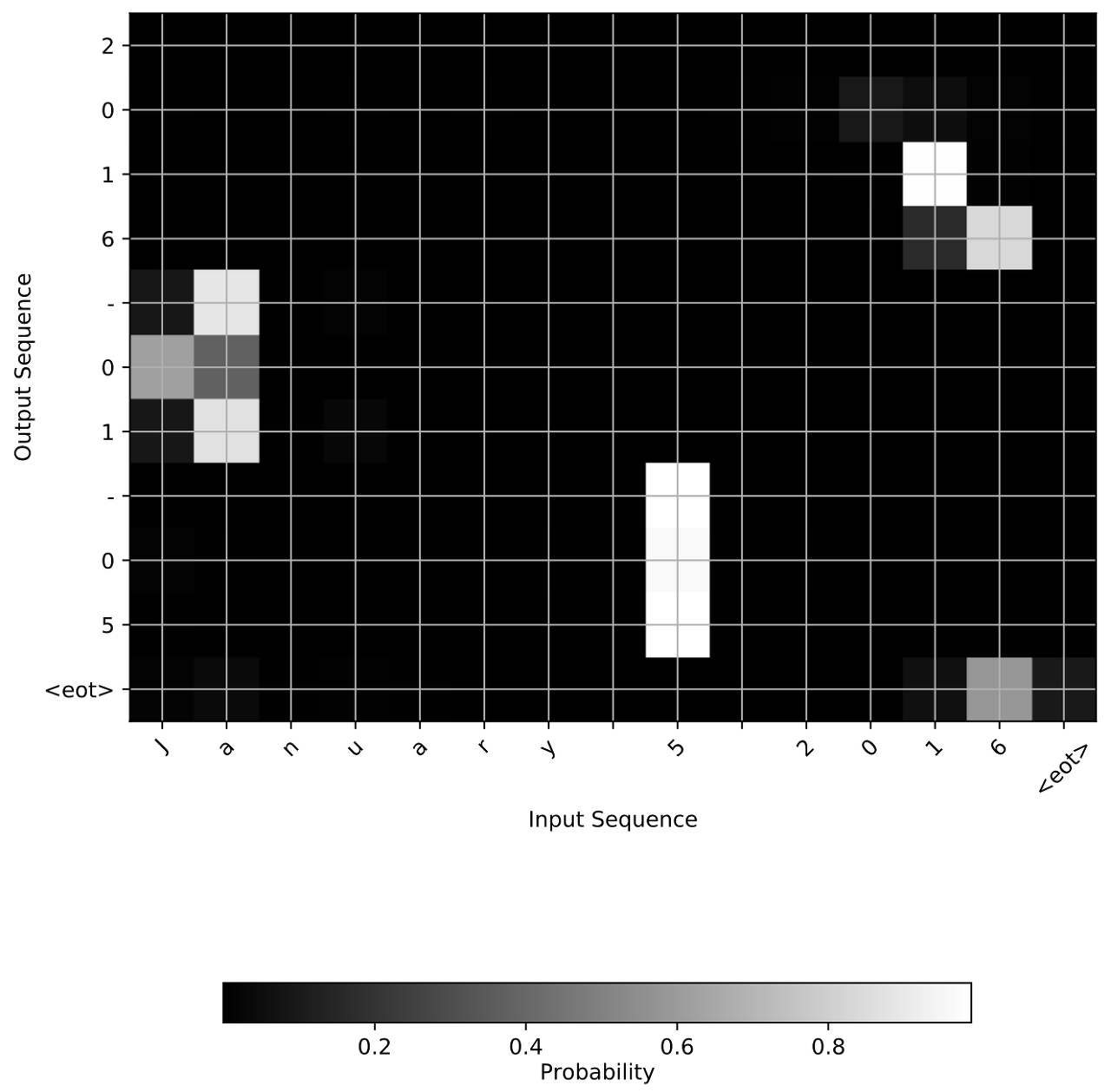
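The surrogate-model idea can be sketched without the LIME library itself. The example below is a simplified illustration, assuming NumPy, Gaussian perturbations around the instance, an exponential proximity kernel, and a plain weighted least-squares fit; `lime_explain`, `black_box`, and every parameter value are hypothetical names chosen for this sketch. The actual library additionally uses interpretable feature representations and feature selection, which are omitted here.

```python
import numpy as np

def lime_explain(predict_fn, x, num_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """Fit a local linear surrogate around x; returns (intercept, coefficients)."""
    rng = np.random.default_rng(seed)
    # 1. Sample the neighbourhood of x by adding Gaussian noise.
    samples = x + scale * rng.standard_normal((num_samples, x.size))
    preds = predict_fn(samples)
    # 2. Weight each sample by its proximity to x.
    dists = np.linalg.norm(samples - x, axis=1)
    weights = np.exp(-(dists ** 2) / (kernel_width ** 2))
    # 3. Weighted least squares: scale rows by sqrt(weight), then solve.
    design = np.hstack([np.ones((num_samples, 1)), samples - x])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sw[:, None], preds * sw, rcond=None)
    return coef[0], coef[1:]

def black_box(X):
    # Stand-in for an opaque model: nonlinear in both features.
    return np.sin(X[:, 0]) + X[:, 1] ** 2

x = np.array([0.0, 1.0])
intercept, coefs = lime_explain(black_box, x)
```

At `x = (0, 1)` the true local slope of this stand-in model is `(1, 2)`, so the surrogate coefficients should land roughly there; how close they get depends on the perturbation scale and kernel width.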

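Attention Visualization

The goal is to gain intuition about how a neural network translates.

It generates a map showing which input characters (tokens) are important when predicting each output character.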
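Such a map is just a matrix of attention weights with one row per output token and one column per input token. The sketch below builds one with NumPy, assuming scaled dot-product scoring; the token lists and the random vectors standing in for encoder and decoder states are made up for illustration, so the resulting weights carry no linguistic meaning.

```python
import numpy as np

def attention_map(queries, keys):
    """Softmax-normalised scaled dot-product scores: rows = outputs, columns = inputs."""
    d = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)   # subtract row max for numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
source_tokens = ["I", "am", "a", "student"]
target_tokens = ["je", "suis", "étudiant"]
queries = rng.standard_normal((len(target_tokens), 16))  # stand-in decoder states
keys = rng.standard_normal((len(source_tokens), 16))     # stand-in encoder states
attn = attention_map(queries, keys)

# Report, for each output token, the input token with the largest weight.
for tok, row in zip(target_tokens, attn):
    top = source_tokens[int(row.argmax())]
    print(f"{tok:>10} attends most to {top!r} ({row.max():.2f})")
```

Passing `attn` to a heatmap plot, with source tokens on one axis and target tokens on the other, gives the usual attention visualization.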

Conference references

PyTorch Conference 2023

pytorch.org

Stanford Graph Learning Workshop 2023

From the NeurIPS 2022 papers: research applying diffusion models to graphs

Analyzing Data-Centric Properties for Graph Contrastive Learning

Keywords: unsupervised representation learning, generalization, graph neural networks, augmentation, invariance, contrastive learning, self supervised learning

Abstract: Recent analyses of self-supervised learning (SSL) find the following data-centric properties to be critical for learning good representations: invariance to task-irrelevant semantics, separability of classes in some latent space, and recoverability of labels from augmented samples. However, given their discrete, non-Euclidean nature, graph datasets and graph SSL methods are unlikely to satisfy these properties. This raises the question: how do graph SSL methods, such as contrastive learning (CL), work well? To systematically probe this question, we perform a generalization analysis for CL when using generic graph augmentations (GGAs), with a focus on data-centric properties. Our analysis yields formal insights into the limitations of GGAs and the necessity of task-relevant augmentations. As we empirically show, GGAs do not induce task-relevant invariances on common benchmark datasets, leading to only marginal gains over naive, untrained baselines. Our theory motivates a synthetic data generation process that enables control over task-relevant information and boasts pre-defined optimal augmentations. This flexible benchmark helps us identify yet unrecognized limitations in advanced augmentation techniques (e.g., automated methods). Overall, our work rigorously contextualizes, both empirically and theoretically, the effects of data-centric properties on augmentation strategies and learning paradigms for graph SSL.

ICLR poster paper: research applying diffusion models to graphs

Diffusion Probabilistic Models for Structured Node Classification

Keywords: diffusion model, graph neural network, structured prediction, node classification

Abstract: This paper studies structured node classification on graphs, where the predictions should consider dependencies between the node labels. In particular, we focus on solving the problem for partially labeled graphs where it is essential to incorporate the information in the known label for predicting the unknown labels. To address this issue, we propose a novel framework leveraging the diffusion probabilistic model for structured node classification (DPM-SNC). At the heart of our framework is the extraordinary capability of DPM-SNC to (a) learn a joint distribution over the labels with an expressive reverse diffusion process and (b) make predictions conditioned on the known labels utilizing manifold-constrained sampling. Since the DPMs lack training algorithms for partially labeled data, we design a novel training algorithm to apply DPMs, maximizing a new variational lower bound. We also theoretically analyze how DPMs benefit node classification by enhancing the expressive power of GNNs. We extensively verify the superiority of our DPM-SNC in diverse scenarios, which include not only the transductive setting but also the inductive setting.
